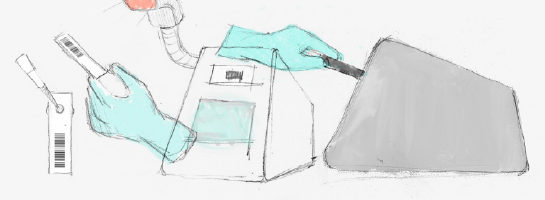

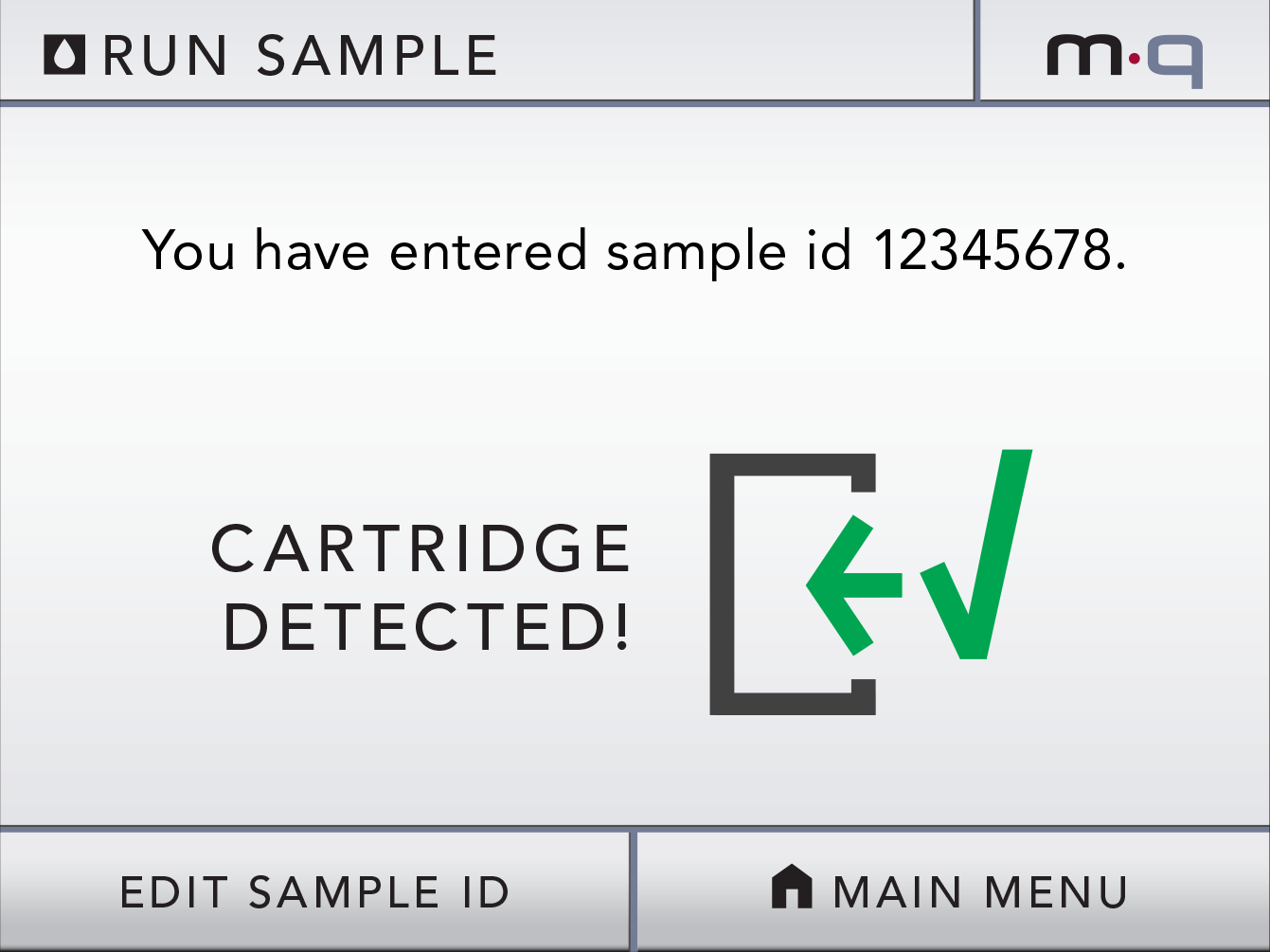

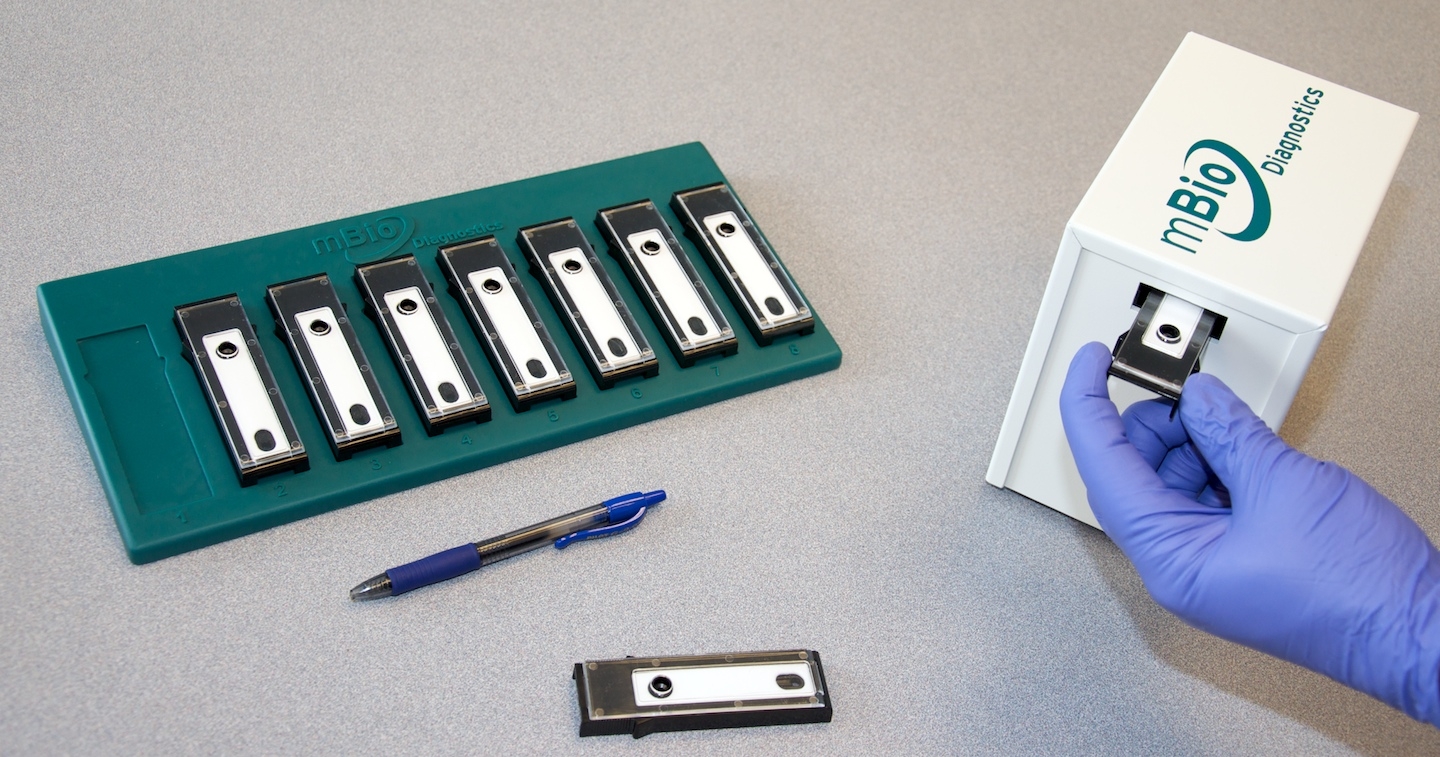

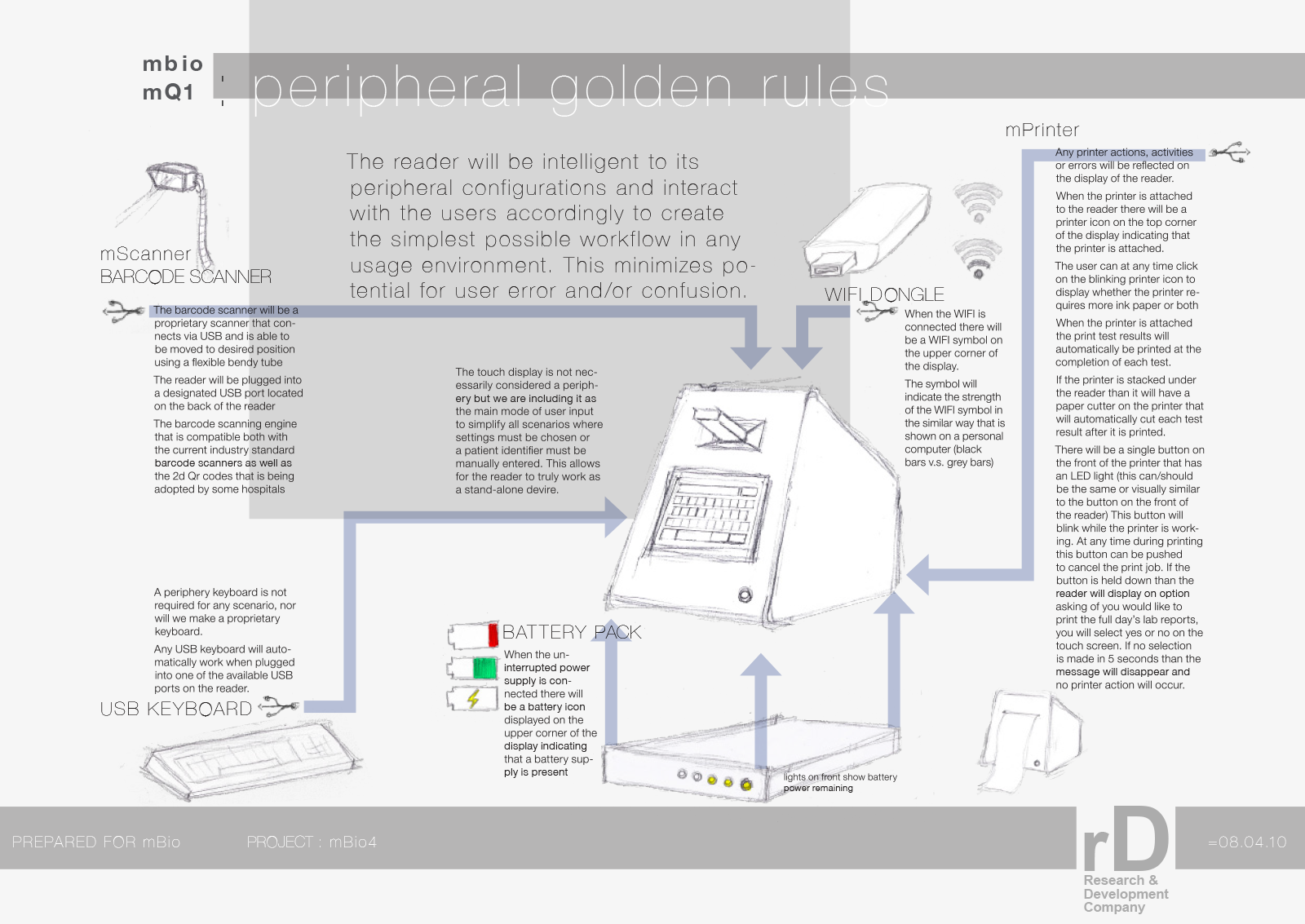

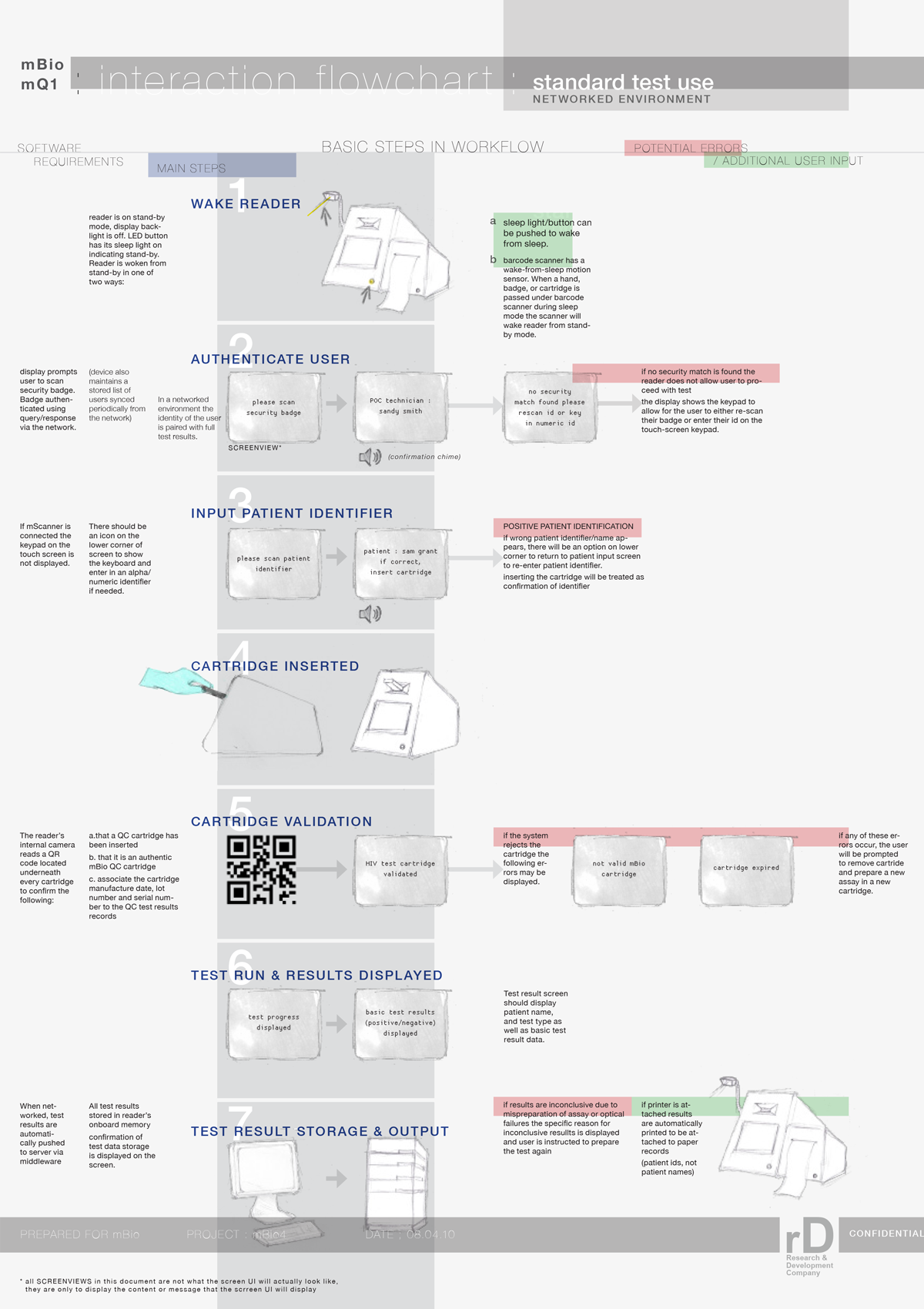

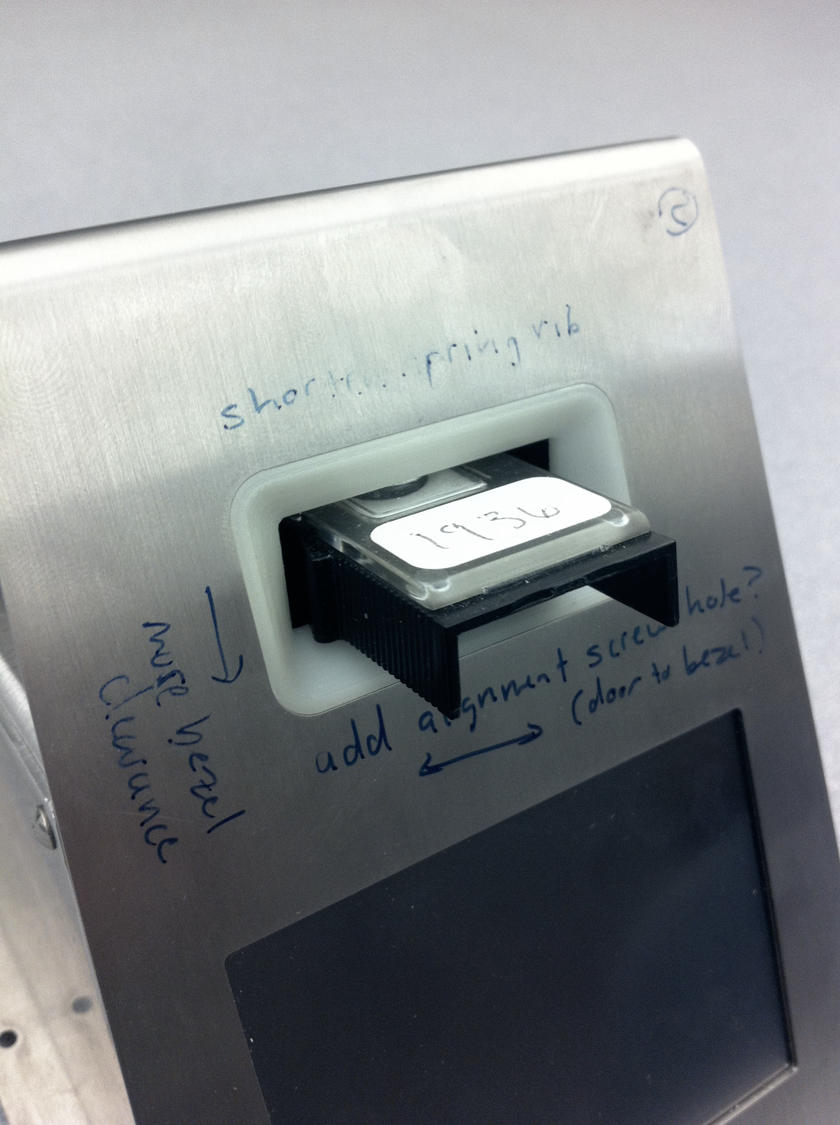

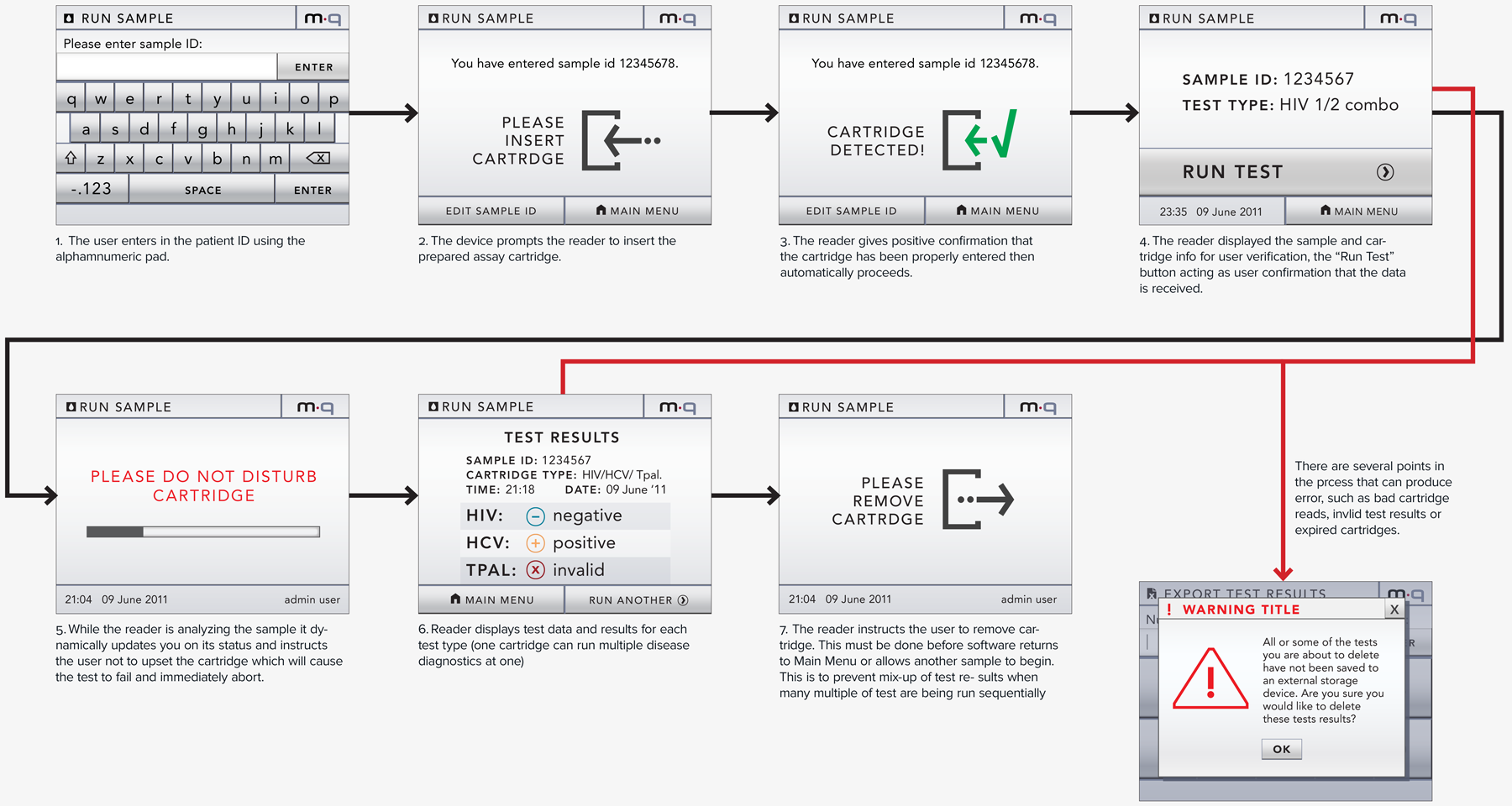

Our client at Seamless Product Development, mBio, incubated and productized disruptive medical diagnostic technologies that have completely changed how infectious diseases such as HIV and syphilis are diagnosed. Their new technologies include a proprietary laser-optics assay-reading process and custom software algorithms, which together enable affordable, rapid, and reliable diagnosis of infectious diseases. By combining these technologies, they have been able to create a diagnostic device for HIV and syphilis that will cost only around three thousand dollars per device, with a form factor barely larger than an electric pencil sharpener. This is groundbreaking compared to the car-sized, million-dollar machines currently in use for reliable diagnosis of HIV.

The device's significantly lower price point and smaller physical size will make reliable testing more readily accessible to developing nations that urgently need these advanced medical technologies to prevent the rapid spread of infectious diseases such as HIV.